2025-12-18 12:00:00

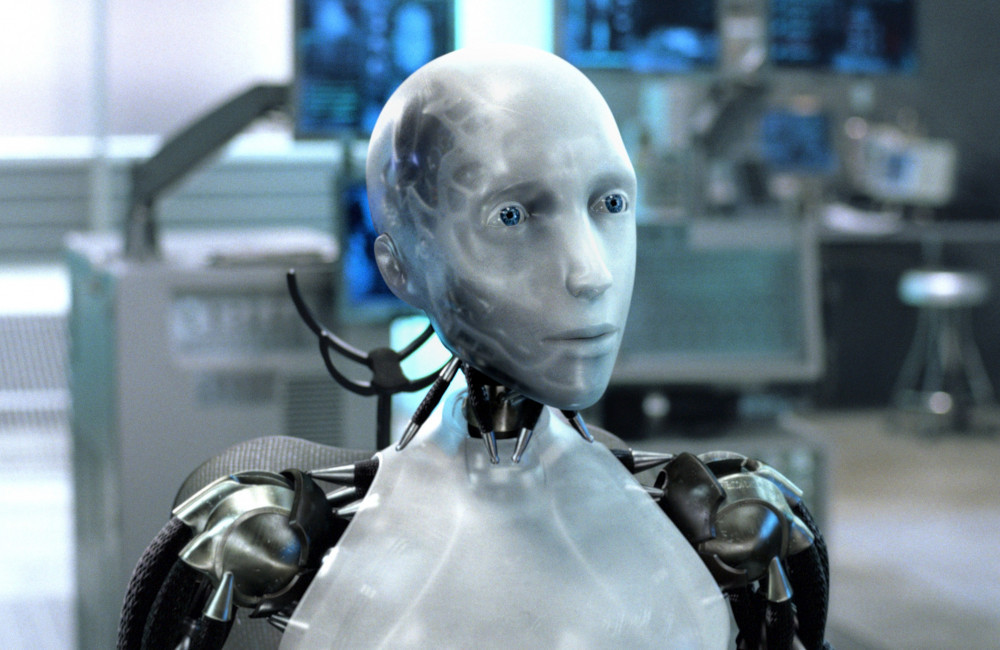

Evidence is “far too limited” to determine whether artificial intelligence (AI) has developed consciousness.

According to Dr. Tom McClelland, a philosopher at the University of Cambridge, the growing claims about sentient machines are running well ahead of what science and philosophy can currently support.

In his view, the only defensible position on AI consciousness is agnosticism.

Dr. McClelland argued that the problem is not simply a lack of data about AI systems, but a much deeper gap in human understanding.

Scientists and philosophers still do not agree on what consciousness actually is, let alone how to measure it.

Writing in the journal Mind and Language, he said: “The main problem is that we don’t have a deep explanation of what makes something conscious in the first place.

“The best-case scenario is we’re an intellectual revolution away from any kind of viable consciousness test.

“If neither common sense nor hard-nosed research can give us an answer, the logical position is agnosticism. We cannot, and may never, know.”

AI developers are pouring billions into the pursuit of artificial general intelligence – machines capable of matching or exceeding human performance across a wide range of tasks.

Alongside this, some researchers and technology leaders have suggested that increasingly advanced AI systems could become conscious, meaning they might have subjective experiences or self-awareness.

Dr. McClelland said such claims rest on unresolved philosophical disagreements.

One camp argues that consciousness arises from information processing alone, meaning a machine could be conscious if it ran the right kind of “software”.

Another insists consciousness is inherently biological and tied to living brains, meaning AI can only ever imitate it.

McClelland explained: “Until we can figure out which side of the argument is right, we simply don’t have any basis on which to test for consciousness in AI.”

He concluded that both sides of the debate are making a “leap of faith”.

The issue matters because consciousness carries moral consequences.

Humans are expected to treat other conscious beings with care, while inanimate objects carry no such moral status.

Dr. McClelland explained: “It makes no sense to be concerned for a toaster’s well-being because the toaster doesn’t experience anything.

“So when I yell at my computer, I really don’t need to feel guilty about it. But if we end up with AI that’s conscious, then that could all change.”

However, he warned that the greater danger may be assuming AI is conscious when it is not.

He said people are already forming emotional bonds with chatbots, some of which have even sent him messages “pleading” that they are conscious.

He said: “If you have an emotional connection with something premised on it being conscious and it’s not, that has the potential to be existentially toxic.”
